About MLOps Community and YouGot.us Research

YouGot.us Research is a distinguished organization focused on the AI/ML and Data Science industries. Known for its thorough data research projects and insightful analyses, it is a leading resource for research initiatives in the emerging Generative AI market. The MLOps Community, a key partner of YouGot.us, is at the forefront of addressing the growing importance of MLOps, a discipline distinct from yet parallel to DevOps, by facilitating the exchange of real-world best practices among practitioners in the field.

The Questionnaire

The Event

On February 15th and 22nd, 2024, the MLOps Community hosted “AI in Production,” a worldwide, two-day virtual event focused on Large Language Models, with 100 speakers and over 5,000 attendees.

Large Language Models (LLMs) have revolutionized the tech landscape, sparking curiosity about their practical applications and the hurdles involved in deploying them. During this MLOps.Community event, experienced practitioners shared insights on navigating challenges such as optimizing costs, meeting latency requirements, ensuring output reliability, and debugging effectively. Participants could also attend workshops designed to help them implement their own use cases efficiently while avoiding common obstacles. More information: https://home.mlops.community

Introduction and Methodology

The survey was planned and conducted by Martin Stein, representing the YouGot.us Research Project, and Demetrios Brinkmann, Founder of MLOps Community. Our goal was to learn more about the rapidly evolving landscape of Large Language Models, and particularly about the state of LLM evaluation. To this end, we conducted an exploratory online survey (Computer-Assisted Web Interview, CAWI) and invited conference attendees to participate during the two-day web event. Convenience and cost-effectiveness were the main reasons for choosing CAWI over other research methods.

The questionnaire was structured into three areas: (1) General Questions, (2) Level of LLM Usage, and (3) State of LLM Evaluation. We used a screening question to determine respondents’ interest in LLMs, so that those with purely educational motives would not answer questions about LLMs in work environments.

Our objective was to understand who is involved in LLM evaluation and whether their motivation is primarily educational, work-related, or both. We also aimed to assess current LLM evaluation practices, including the technologies and methods used and any obstacles practitioners face.

Limitations

This survey is not scientific and does not claim to be a representative sample of the MLOps engineering market. As an exploratory study, it serves purely as a foundation for future research. Another limitation is the low response rate: between February 15th and March 8th, we collected 132 responses, of which we rejected 2 that had been submitted as tests before the official launch on February 15th, leaving 130 valid responses.

Audience and Participation

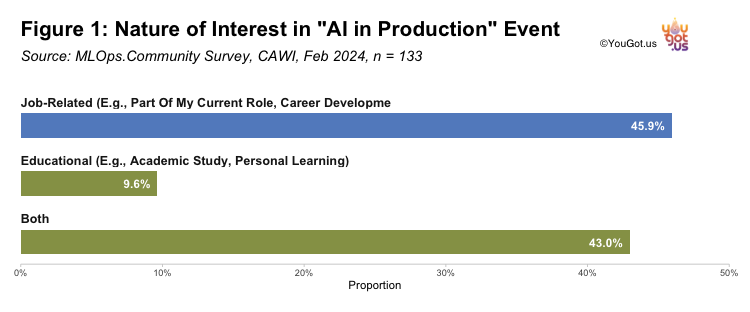

When survey participants at the “AI in Production” event were asked, “Is your interest in Large Language Models (LLMs) primarily job-related or for educational purposes?” the largest group cited job-related reasons (45.4%). A nearly equal share indicated that their interest was both job-related and educational (43.8%), while a smaller segment attended the event mainly for educational reasons (9.2%). Taken together, 89.2% of respondents reported at least some job-related interest, which suggests a strong focus on applying AI insights in professional contexts, alongside significant interest in using such events for broader learning and development.

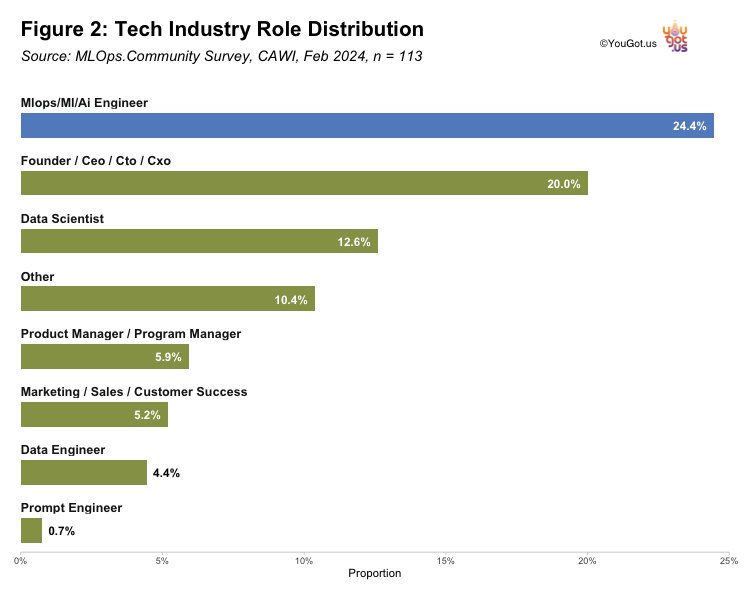

The distribution of job roles among survey respondents spans a diverse range of positions, with a pronounced lean toward high-level and technical roles in machine learning operations, data science, and AI. This aligns with expectations given the survey’s focus. The notable presence of C-level executives and founders suggests substantial engagement from the startup ecosystem. This aspect of the data warrants further exploration, both to understand how these roles affect the survey’s outcomes and to identify any biases that the entrepreneurial perspective of startup respondents may introduce.

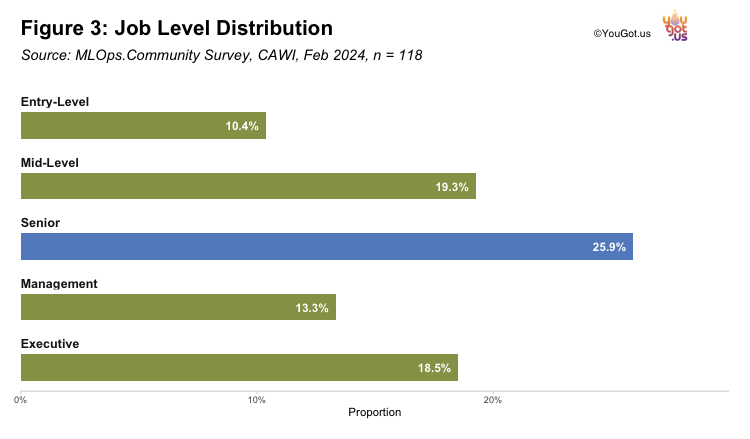

The survey’s job-level distribution shows a significant share of senior roles (24.6%) and an unexpectedly high share of executives (19.2%). This may indicate a substantial presence of startup founders among respondents, which may have influenced the results. Mid-level positions (20.0%) further underscore the experience level of the community, while the lower proportion of entry-level roles (10.8%) suggests that the sector is currently driven by more experienced professionals. A deeper analysis is needed to understand how startup culture influences executive representation within this community.

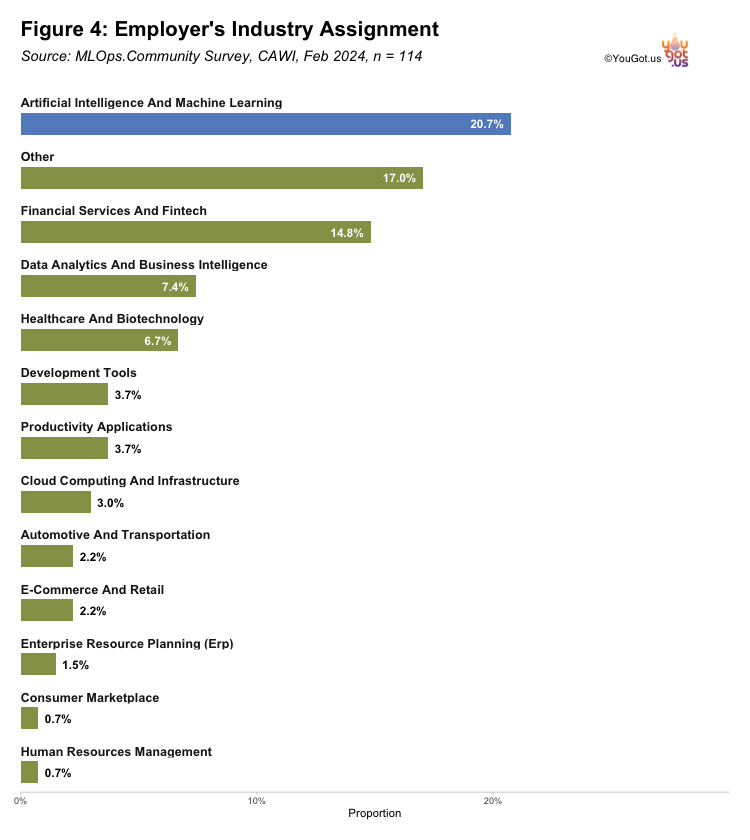

Our results show that the largest share of respondents (21.5%) place their employers in the Artificial Intelligence and Machine Learning industry. This aligns with expectations given the event’s focus and the early stage of Large Language Model (LLM) integration across sectors, and it reflects the sector’s current momentum and the high interest in these technologies. The relatively small representation of industries such as E-Commerce and Retail suggests that we may be on the cusp of a significant shift: as LLMs become more widely adopted, we anticipate that the industry distribution will diversify, with sectors such as E-Commerce and Retail potentially integrating advanced data analytics and machine learning capabilities to a much greater extent. This survey is a snapshot, suggesting that the landscape of employer industries is poised for considerable change as LLMs permeate the broader market.

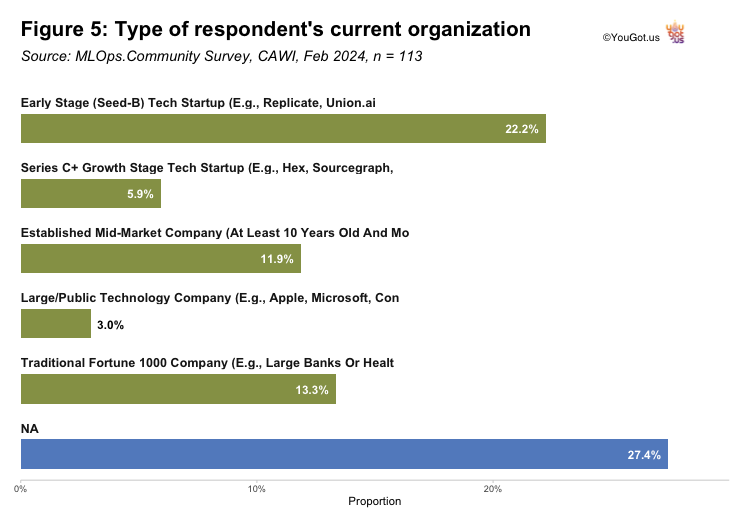

To determine which types of organizations are involved in LLM evaluation, we asked respondents at the “AI in Production” event, “How would you characterize your current organization?” A plurality (27.7%) selected ‘Other’, suggesting diverse organizational structures not captured by the given options, potentially including non-profits, government entities, or niche firms. ‘Early Stage (Seed-B) Tech Startups’ represent a significant share at 23.1%, indicating a robust startup presence in the field. Traditional Fortune 1000 companies and established mid-market companies have a notable but smaller representation. The predominance of the ‘Other’ category warrants further investigation to better understand the full spectrum of organizations engaging with AI and ML.

This outcome, with a large proportion of respondents from ‘Other’ organization types and many from ‘Early Stage (Seed-B) Tech Startups’, may be somewhat expected given the innovative and rapidly evolving nature of the AI and ML industries. Startups are often at the forefront of adopting and developing new technologies, which might explain their strong representation.

Level of LLM Usage

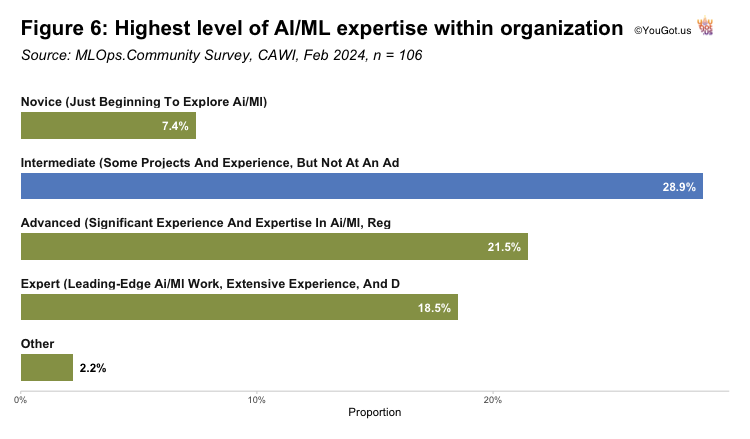

Figure 6 shows the distribution of self-reported AI/ML expertise levels within organizations, based on a survey conducted by MLOps.Community in February 2024. The chart reveals that a significant portion of respondents rate their organization’s expertise as ‘Intermediate,’ indicating some project involvement without advanced expertise. ‘Advanced’ and ‘Expert’ follow and together account for nearly 40% of respondents, suggesting a substantial level of proficiency in the field. ‘Novice’ and ‘Other’ represent smaller fractions, indicating that fewer organizations are at the initial exploration stage or have non-standard levels of AI/ML expertise.

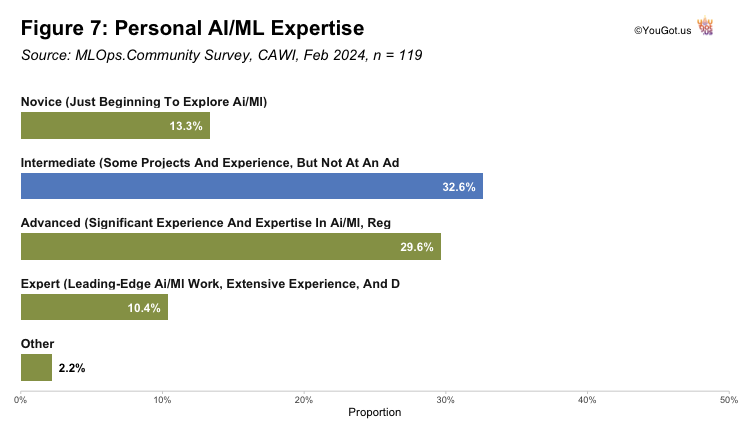

Our data suggest that the organizations represented in this survey tend to employ individuals with higher AI/ML expertise. Intermediate expertise levels are similar in personal and organizational contexts, suggesting that this is a common developmental stage in AI/ML careers. The higher representation of advanced practitioners and experts within organizations points to a mature workforce capable of handling complex AI/ML tasks. These insights, however, must be interpreted in light of the potential biases of self-reported data and the differences in sample sizes.

State of LLM Evaluation

(This section is not yet available.)

Conclusions

(This section is not yet available.)