The Event

On February 15 and 22, 2024, MLOps Community hosted a global, two-day virtual event about using Large Language Models (LLMs) in production. The event had 100 speakers and over 5,000 attendees. Sessions covered topics such as cost management, latency requirements, reliability, and debugging, and attendees could also join workshops on setting up LLM use cases more efficiently. For more information: https://home.mlops.community

Introduction and Methodology

Christina Garcia from the YouGot.us Research Project and Demetrios Brinkmann from MLOps Community designed and ran an online survey during this event to explore how people are using and evaluating LLMs. We used a Computer-Assisted Web Interview (CAWI) approach because it is convenient and keeps costs low.

The survey had three sections:

General Questions

Level of LLM Usage

State of LLM Evaluation

We also screened out people who were learning about LLMs purely out of personal interest, so that we could focus on those using them for work, at least in part.

Limitations

Important Note: This was an exploratory survey with limited scope, and its respondents are not a representative sample of the entire MLOps market. Between February 15 and March 8, we received 132 responses, 2 of which were test responses and were excluded.

Audience and Participation

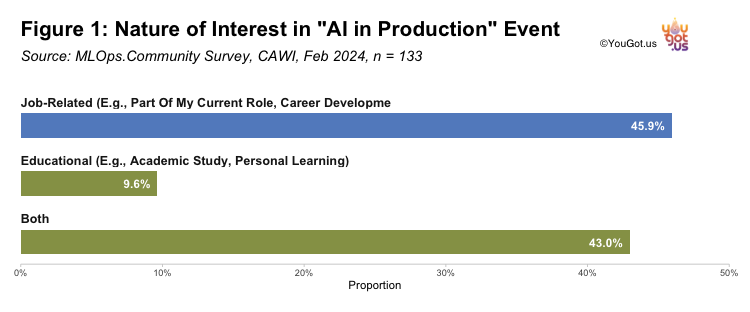

When we asked participants whether their interest in LLMs was job-related or educational, 45.4% said they were primarily focused on work applications, another 43.8% reported a mix of job-related and educational motivations, and 9.2% leaned mainly toward learning. These findings indicate that most respondents are looking for practical AI insights for professional use while still valuing broader skill development.

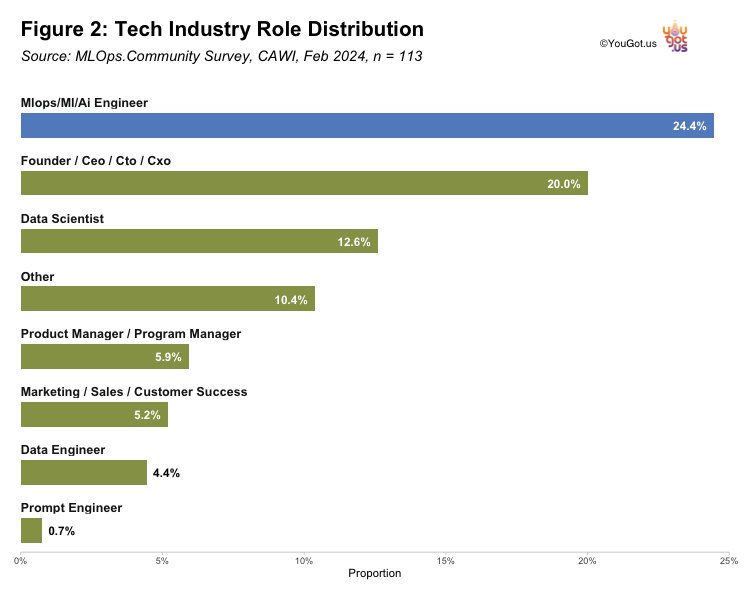

The range of job roles reported by respondents reflects a strong tilt toward senior and technical positions in machine learning, data science, and AI, which aligns with the event's emphasis on applied AI. Of particular note is the sizeable group of C-suite executives and founders, which suggests strong startup involvement. Their presence raises questions about how leadership perspectives may shape the survey responses and points to potential biases tied to the entrepreneurial nature of these companies.

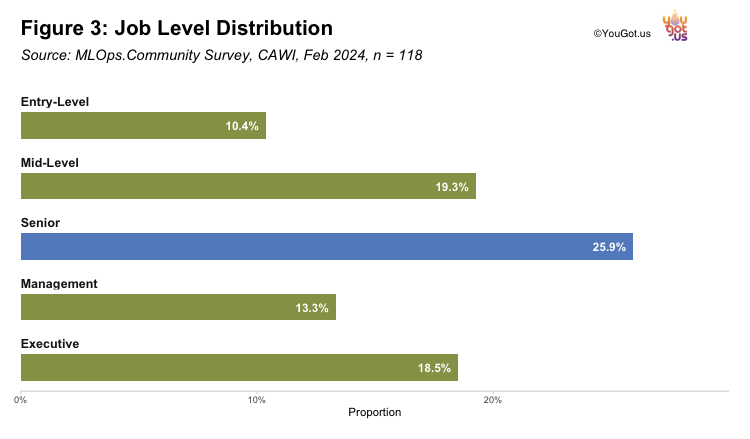

Senior roles account for 25.9% of respondents and executives for 18.5%. That executive share is higher than one might expect in a more traditional setting and is consistent with a notable presence of startup founders. About 20% identify as mid-level, reflecting a relatively seasoned community, and only 10.4% are entry-level. These numbers suggest that higher-level professionals drive much of the activity in this space. Further analysis could clarify how startup culture contributes to the strong executive turnout.

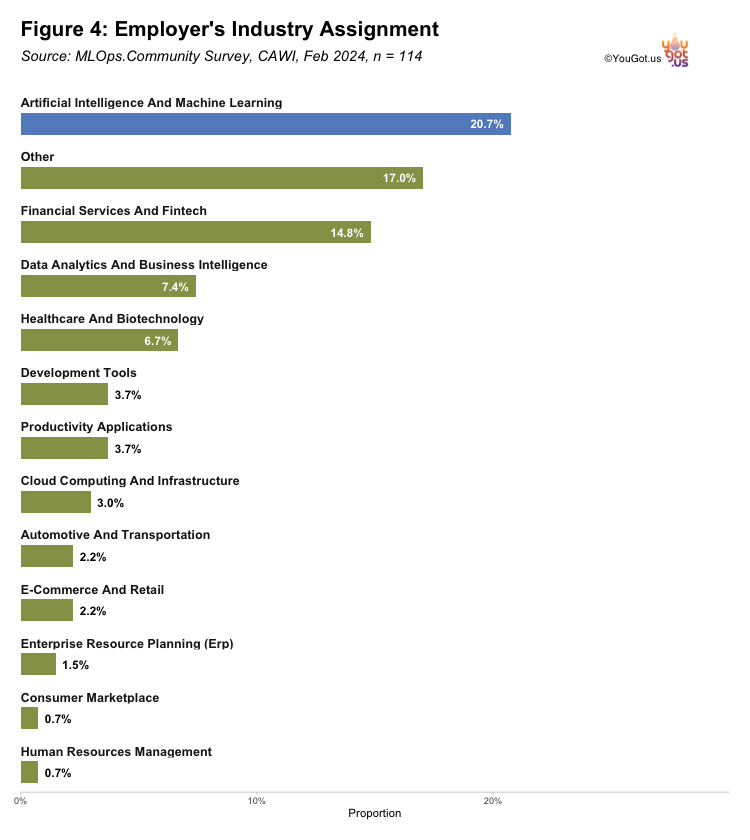

The largest share of respondents (20.7%) works in the Artificial Intelligence and Machine Learning industry. This is not surprising given the survey's LLM focus and the early stage of LLM adoption in other fields, and it reflects the sector's current momentum. Industries such as E-Commerce and Retail remain relatively underrepresented, which hints that broader adoption may still be ahead. As LLMs become more commonplace, we are likely to see greater diversification as additional sectors integrate advanced analytics and machine learning. Overall, these findings offer a snapshot of an evolving market that is poised to change considerably as LLMs gain wider traction.

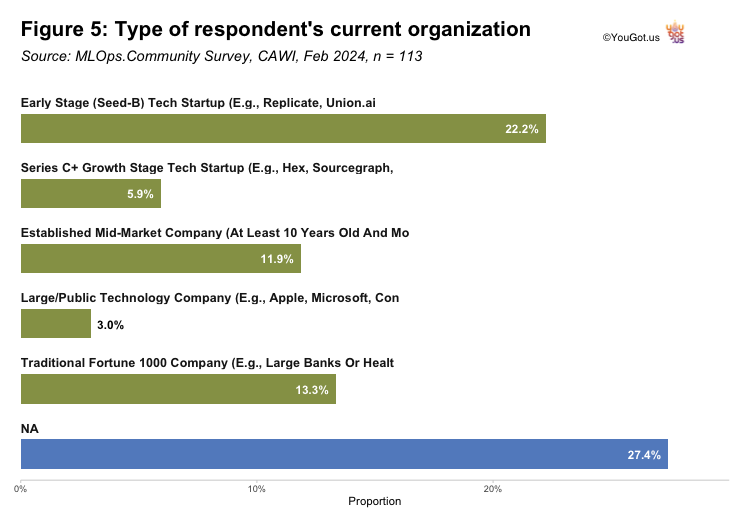

Respondents were asked, “How would you characterize your current organization?” This question aimed to show which types of businesses attending the “AI in Production” event are actively evaluating LLMs. A plurality (27.4%) selected “Other,” suggesting organizational types not covered by the standard categories (for example, nonprofits, government agencies, or niche firms). Early Stage (Seed-B) Tech Startups made up 22.2%, highlighting a strong startup presence. Traditional Fortune 1000 companies (13.3%), established mid-market firms (11.9%), and Series C+ Growth Stage Tech Startups (5.9%) each contributed smaller shares. Large or publicly traded tech companies accounted for only 3.0%.

Overall, the higher concentration in “Other” and Early Stage categories aligns with the rapidly evolving nature of AI and ML, where new or unconventional organizations often explore emerging technologies first. These results underscore the diverse array of participants engaging with LLMs, ranging from small startups to large enterprises, though startups appear to be at the forefront of adoption.

Level of LLM Usage

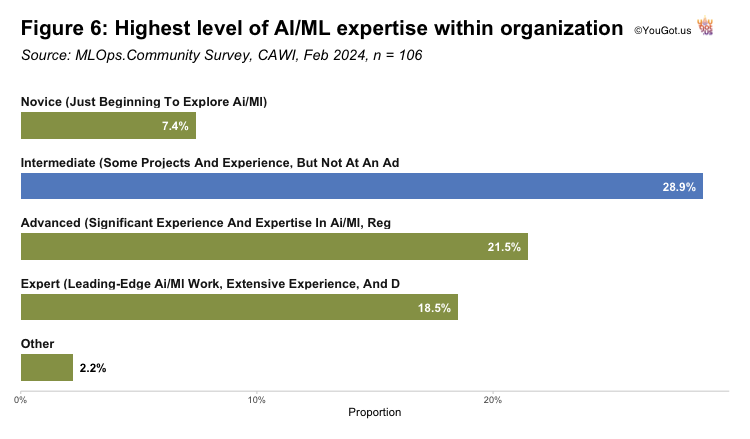

Figure 6 shows the distribution of self-reported AI/ML expertise levels within respondents' organizations, based on the survey conducted by MLOps Community in February 2024. The most common classification is ‘Intermediate,’ indicating some project involvement without advanced expertise. ‘Advanced’ and ‘Expert’ follow and together comprise nearly 40% of participants, suggesting substantial proficiency in the field. The ‘Novice’ and ‘Other’ categories represent smaller fractions. This indicates that fewer organizations are at the initial exploration stage or have non-standard levels of AI/ML expertise.

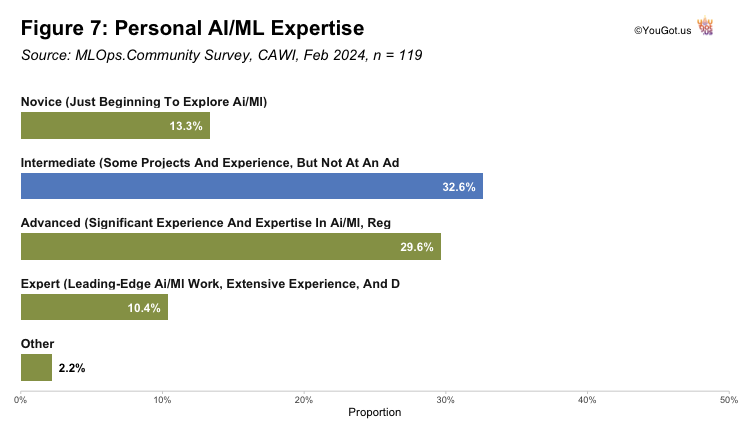

Our data suggest that the organizations represented in this survey tend to employ people with high AI/ML expertise. Intermediate expertise is similarly common in personal and organizational contexts, which suggests that it is a typical developmental stage in AI/ML careers. The higher share of advanced practitioners and experts at the organizational level points to a mature workforce capable of handling complex AI/ML tasks. These insights, however, should be interpreted in light of the potential biases of self-reported data and the differences in sample sizes.

State of LLM Evaluation

(This section is not yet available.)

Conclusions

(This section is not yet available.)

About MLOps Community and YouGot.us Research

YouGot.us Research is an organization focused on the AI/ML and Data Science industries. Known for thorough data research projects and perceptive analyses, it is a leading resource for research initiatives in the emerging Generative AI market. The MLOps Community is a key partner of YouGot.us. It addresses the growing importance of MLOps, a discipline distinct from but parallel to DevOps, by facilitating the exchange of real-world best practices among practitioners.

The Questionnaire

Citation

@online{garcia2024,
  author = {Garcia, Christina},
  title = {AI in {Production}},
  date = {2024-11-02},
  url = {https://yougot.us/news/2024-11-02-AI-in-Production/},
  langid = {en}
}